Introduction

Prompt engineering is the process of creating a prompt such that the LLM knows exactly what to do and how it should do it. The more specific and effective the prompt is, the better the LLM can consistently complete the task it was given. The issue is, with all of these fancy Large Language Models that have the sole purpose of automating human natural language, why are we still manually generating these prompts? Why can’t the LLM prompt itself? The answer: it can. In fact, there are many ways to do it already.

The process of creating a prompt

To understand how an LLM can prompt itself, let's talk about what makes a prompt a good prompt. There are several techniques to develop a good prompt, and depending on what kind of task you want the LLM to do, you may want a variety of the following techniques. We will go into more detail about all of these after.

- Specific Information -- The model isn’t a magician, it can’t predict exactly what you want. You need to be thorough with your specifications so that the model can complete your task as best as possible. Of course, if you want your model to produce more creative outputs, live if you want it to generate ideas or a story, then you won’t need to do as much of this.

- Examples of successful outputs -- This is a powerful technique, and is more technically called, “few shot prompting.” Again, it is a technique that is not suitable for all tasks but is great when you want your LLM to have complex behavior that it hasn’t seen before. It is often used when you want a certain type of response format or want it to strictly follow certain rules since LLMs are great at replicating what it has seen. This is usually done by adding a separate example section in the prompt and then showing what the output should be given as an example input.

- Chain of thought (CoT) -- “Chain of thought” means explaining the thought process behind an answer, before actually giving the answer. It has shown that this is incredibly effective for logical reasoning, and makes things like multi-step math problems much easier for the LLM. The simplest way of achieving this is by adding the line “let’s think this out step by step,” at the end of your prompt.

- Planning -- This isn’t used much in human prompting, but it is invaluable for LLM prompting itself due to how easy it is to implement and its effectiveness. It is very similar to CoT, but instead of the model explaining the steps as it generates the output, the steps are already outlined in the prompt. The prompt outlines the general process the LLM needs to do to get the output. This works great for problems that have many steps. In fact, sometimes each step in the plan will get its own prompt in order to ensure that the LLM completely finishes each step.

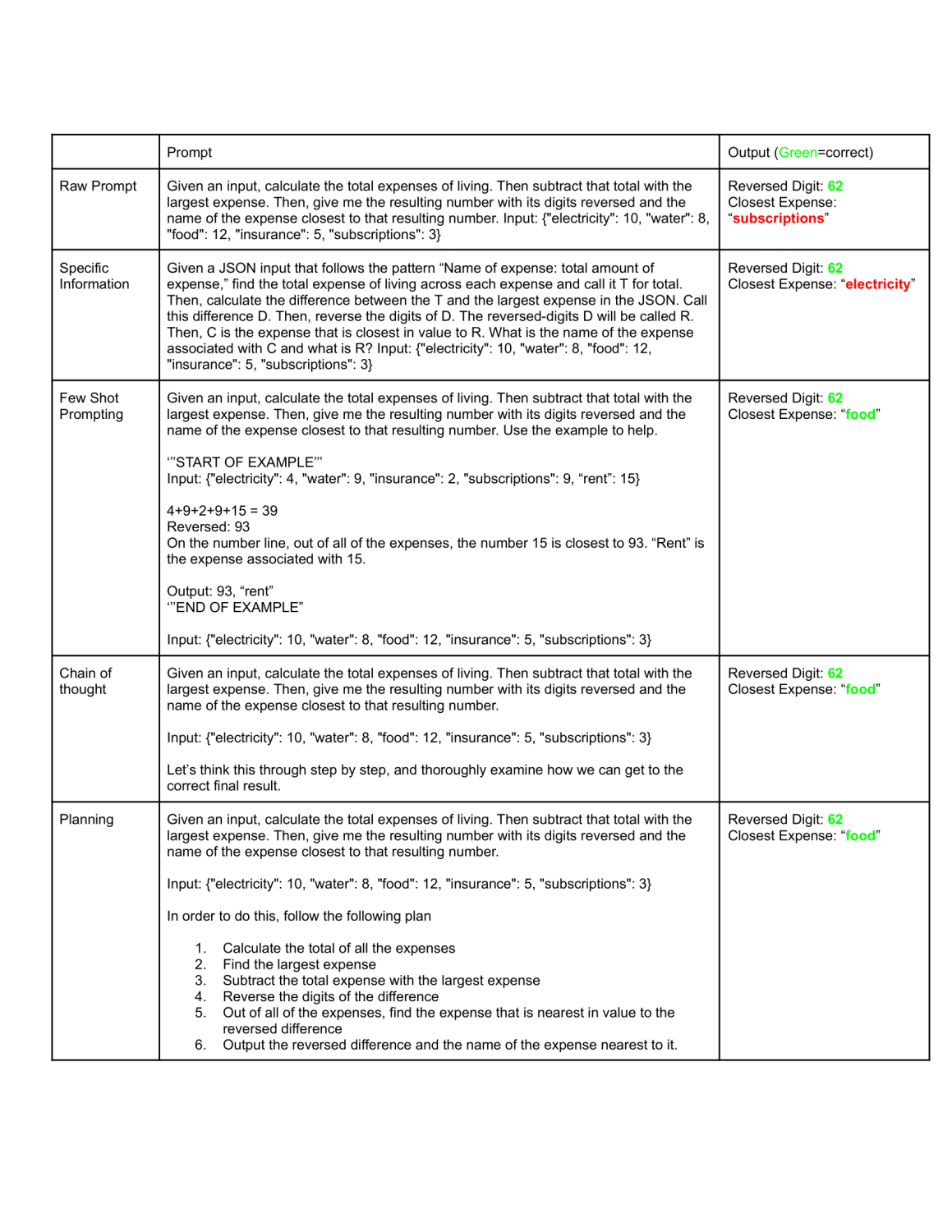

In order to illustrate the usefulness of these techniques, let’s see them all in action. We are going to give a purposefully confusing task (which can be seen in the “raw prompt”) and see how our techniques may be able to make the task more clear and easier for the model. The output column shows if the two outputs asked of the model are correct or not.

The results of each technique are quite promising. They were all able to do the first few steps, but the original prompt fell short when asked to find the closest number. Specific information also failed, but that was more because this prompt was more of a logical issue and does not benefit as much from specific information. I still tried my best to illustrate how the prompt could be more concise, and it did get a closer answer. On the other hand, the next three were all able to make it produce the correct result.

Prompt LLMs with LLMs

Now that we know some of the key techniques that we can leverage for prompt creation, how can we make it so that the LLM can create such a prompt? Well, the answer is simple: More prompting!

Out of the four techniques discussed above, the easiest one to implement is planning. Just simply give the LLM a prompt and ask it to create a plan for that prompt. Then combine the plan with the original prompt, and we got ourselves a super-charged prompt. Let’s take the example, “Give me an itinerary for my trip to New York.”

Step 1: Ask the LLM for the plan ( https://chat.openai.com/c/679963a0-8c38-4e9e-a80a-945b6a3206e7 )

Given a prompt, generate a 3-5 step plan to follow in order to complete the task given by the prompt. Here is the prompt: Give me an itinerary for my trip to New York.

Step 2: Add the plan to the original prompt

Give me an itinerary for my trip to New York. In order to do so, follow these steps: **insert plan here**

Another way to use planning is to separate each step of the plan into its own prompt, and then run the model multiple times. This is especially useful for lengthy tasks such as multi-step research tasks or math word problems.

Step 1. Ask the LLM for the plan (same as above)

Step 2. Ask the LLM to do the first step of the plan.

Step 3. Take the output from the first step and add it to the prompt. Then ask it to complete the second step

Step 4: Repeat until all steps have been completed

First, we simply ask for the plan. Then, instead of asking the LLM to do the entire plan all at once, we ask it to only perform the first step. This is nice because the LLM is now contained within the first step so it won't try to think about the next steps and wouldn’t confuse itself at once. Think of it like taking bites out of a hamburger instead of stuffing the entire into your mouth. After the model does the first step, we move on to the second step. Except this time, we include the response of the first step so that the model has whatever information it generated in the first step for the next step. This process is repeated until all steps are completed.

The second way we can make an LLM prompt itself is by making it come up with its own successful examples. This may not necessarily increase the effectiveness like the previous method did, but it will increase predictability . The model creating a predictable output is very important if you are going to be parsing that output with code. An example of making the model predictable is making it output its data in a JSON format so that a programming language can then parse and digest that data.

In order to do this, we simply ask the model to rewrite our prompt with an example. Here is an example of something that could work:

First, create ask the LLM to add an example to our prompt.

Given the following prompt, rewrite the prompt but also include one successful

completion of the prompt given a character where a JSON with three pieces of

data are included: Family, Friends, and Enemies

The prompt: Your job is to find data about fictional characters.

Then, you can just use the prompt the LLM gave. For example, ChatGPT produced the following.

Your task is to gather information about fictional characters and present the data in JSON format, including details about their Family, Friends, and Enemies. Here's an example of how the data should be structured

Example JSON for a fictional character named "John Doe":

json

{

"Name": "John Doe",

"Occupation": "Detective",

"Age": 35,

"Family": {

"Spouse": "Jane Doe",

"Children": ["Alex Doe", "Emily Doe"]

},

"Friends": ["Sarah Johnson", "Mike Smith"],

"Enemies": ["The Red Scorpion Gang"]

}

Please provide JSON data for other fictional characters following the same structure as the example.:

Now, we can be assured that every time the prompt is used, we would have the exact same keys in our JSON. Without an example, the model may randomly add or remove a key in the JSON making it hard to parse with code.

Pre-existing AI prompting solutions

Unsurprisingly, several auto-prompting projects have arisen. One of them is AutoGPT. AutoGPT is described as an “Autonomous GPT 4 agent,” and is capable of completing any generic goal or a series of goals defined by the user by using GPT4.

AutoGPT uses a variant of the automated planning outlined earlier. Given a task, such as, “Research information about the 34th president of the US and write your results on a text file,” it will generate the steps it needs to take to succeed in it. For this, the first step might be, “Google the 34th president.”

AutoGPT also has many other features such as web search, short-term and long-term memory, the ability to handle file operations, and much more. However, in the end, once the user gives it its goal, it is simply GPT4 communicating with GPT4. In fact, for each step, GPT4 is the one that decides when the task has been completed. This can lead to problematic circumstances where the bot continues infinitely because it is unable or doesn’t want to move on to the next task, therefore never actually finishing.

There are also several services that give you the ability to do this kind of prompting. Structured Prompt is an application that allows you to generate your own specialized TRACI (task, role, audience, create, intent) prompt. They basically boil the prompt down into 5 questions that must be answered about the prompt, like “What is your task?”, “Who is your audience?”, “What is the role of the LLM?”, etc.

Although I can’t confirm whether this method is actually effective, it is a novel way of creating a prompt based on a structured philosophy. In addition to this, they have a way of creating these kinds of prompts using generative AI. This means that you can just give it your regular prompt, and it will generate the TRACI prompt for you.

My own prompt engineer

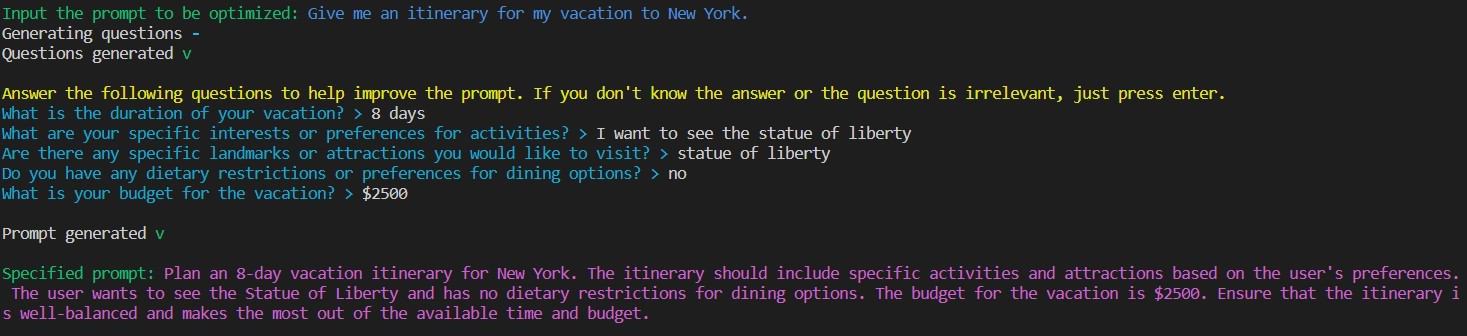

This article was inspired by a small application that I had made in order to make prompting easier. The idea was that given a prompt, the program would generate questions about the prompt and use those answers to make the prompt more specific, therefore getting rid of any ambiguous information that the LLM would have to guess. Then, it would also generate a plan for the LLM to follow to make sure that it would work best. The code is a bit too long for me to create a tutorial here, but I will showcase how it works. Let’s take the example, “Give me an itinerary for my vacation to New York.”

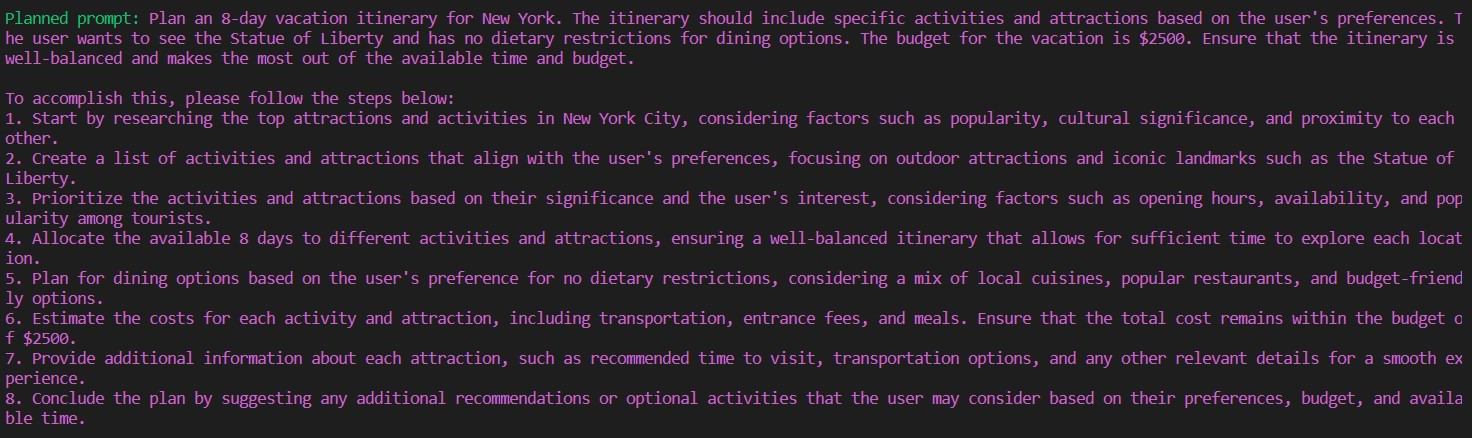

After it makes the specified prompt, it then generates a plan for the LLM to follow.

You can see the output of the original prompt here and the output of the new prompt here . As you can see, the new prompt is much more specialized towards the user’s needs. It gets the correct amount of days and includes advice about transportation and food.

The way this works is very simple too. It just asks ChatGPT for a couple of questions that relate to the prompt, and then uses those answers to create a better prompt as well as a plan. You can try it out yourself by downloading the code on github .

Conclusion

LLMs will continue to get more integrated into our everyday applications. However, in order to use them we can just use regular ol’ code, we need to come up with prompts in our natural language to make the LLM work. As such, prompt engineering has become a big part of using LLMs and will continue to be, since although LLMs are great at natural language, they aren't mind readers. To make a good prompt, you need to be sure to be very specific about your requirements in order to get the best result possible. But why go through that headache, when you can just ask the LLM to do it for you?

Even with all that being said, as time goes on these models will get more powerful. Less of this prompting will be needed since better models can guess or even ask for more information from the user. But bigger and better models aren't necessarily a good thing. Bigger models will need beefier hardware and more electricity, meaning higher API costs. Even now, GPT3.5 is preferable over GPT4 due to price. This all leads me to one big point: Better prompts mean we can get away with weaker LLMs means we can save money. And we all want to save money.