All Articles

Article List

Large language models are being used everywhere. Since the release of OpenAI’s ChatGPT, a domino effect occurred causing everyone to start integrating AI into their own systems and workflow. Although only a short time has passed, many complex apps and technologies are being developed with this technology baked into it. However, even with all this innovation, we have only scratched the surface of how much artificial intelligence can disrupt the industry.

MedGPT is a product of this never-ending innovation -- it is a chatbot capable of diagnosing illness and suggesting remedies. It can mimic a conversation between a patient and a doctor as if you were actually standing in a doctor’s office yourself, and give expert level medical advice. It uses medical textbooks and online forums managed by MDs in order to present the most accurate and source-backed data.

Exposing MedGPT to medical data with embeddings

A big problem with LLMs like OpenAI’s ChatGPT is that the query can only contain a limited amount of tokens. This means that if you have a large database, you can’t just cram all the data into the query for ChatGPT to use. Instead, you have to find a smart way to piece together the most relevant segments of data based on the query the user provides. In order to solve this, we can use a semantic word encoding technique called embeddings.

Embeddings are a way to express the meaning of a collection of sentences in a mathematical way. This allows for the computer to find how similar two pieces of text are based on their internal meaning. This is extremely beneficial when paired with LLMs. For example, let's say you have a three-hundred page book and you want to have an LLM intelligently answer questions about the book. Unfortunately, you can’t just give the entire book for the LLM to read -- the book is far too long. Using embeddings however, you can find the text in the book which is most similar to the query. This allows you to selectively give only the most relevant text to the LLM, which can then process it and answer the question. For example, if you asked something about a character’s appearance, it can find every paragraph in which that character is described.

For MedGPT specifically, embeddings unlock the ability to store an enormous amount of medical knowledge. Whenever you have a query, it will sift through that giant database and find the information you need. Then, ChatGPT will intelligently formulate a response to mimic a doctor giving you advice.

The source of MedGPT's knowledge

For this project I chose two sources of data: A book called “ Handbook of Signs & Symptoms ” by Lippincott Williams & Wilkins and a dataset of medical forum question and answers. I chose these in particular because they not only had information of useful medical knowledge, but it also had advice on what questions to ask the patient. This allowed the chatbot to figure out what follow up questions it needed to ask in order to get a more complete picture about their symptoms and provide a more concise answer.

This data is processed into something called a vector database. There are many different solutions for efficiently querying large amounts of embeddings. These are called vector databases, and have the ability to process many embeddings at once very quickly. There are many different options, but I chose to go for the open source and free Chroma database. All of the raw text from the textbook and forum are turned into embeddings and stored for use by MedGPT.

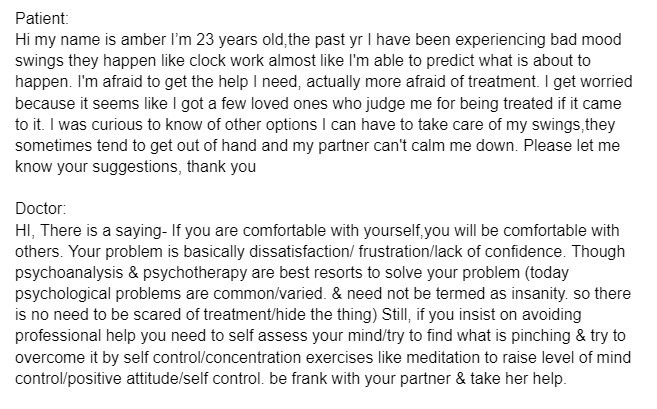

An example of data from the dataset

Prompt Engineering

Prompt engineering is crucial to how well a LLM performs at a given task. It is basically how you word the prompt that you pass onto the LLM. A bad prompt can lead to bad responses, such as hallucination, made up information, and other side effects. MedGPT has a prompt specifically made to minimize this. Here is the prompt that MedGPT uses:

“You are a doctor that is talking to the patient in order to find the cause of their symptoms and how to help them. You will be given a passage from a medical textbook for advice on what to say and ask the user based on their query. Make sure you ask for more information if you need it to answer the question. If you are absolutely unsure of the question, say ‘I don't know.’ Make sure you provide concise information.”

This prompt has several key features to improve the potency of the model. 1) It establishes the purpose of the AI by defining what it does in the first sentence. 2) It specifies what data it will be getting so that it will actually use it to answer questions. 3) It makes sure that the chatbot will continue to ask questions about the patient’s issues to emulate a real doctor-patient conversation. 4) It avoids hallucinations by ensuring that if the AI doesn’t know something, it will just say so rather than try to make it up.

The Result

By combining these things using OpenAI’s Chat Completion API, I get an experience similar to actually talking to a doctor. It can pinpoint various diseases and give recommendations on what to do about your symptoms. The code can be found on my github.

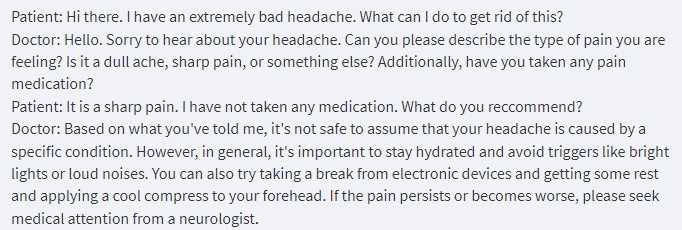

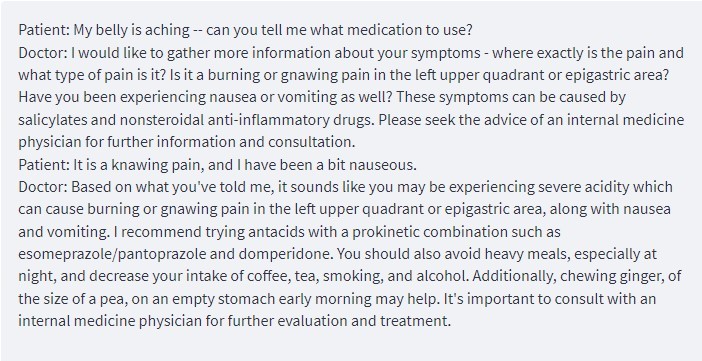

Examples of MedGPT at work

MedGPT vs ChatGPT

MedGPT uses ChatGPT under the hood, but due to embeddings, prompting, and other tricks it behaves much differently. If you ask for the same medical advice from MedGPT and ChatGPT they will answer differently.

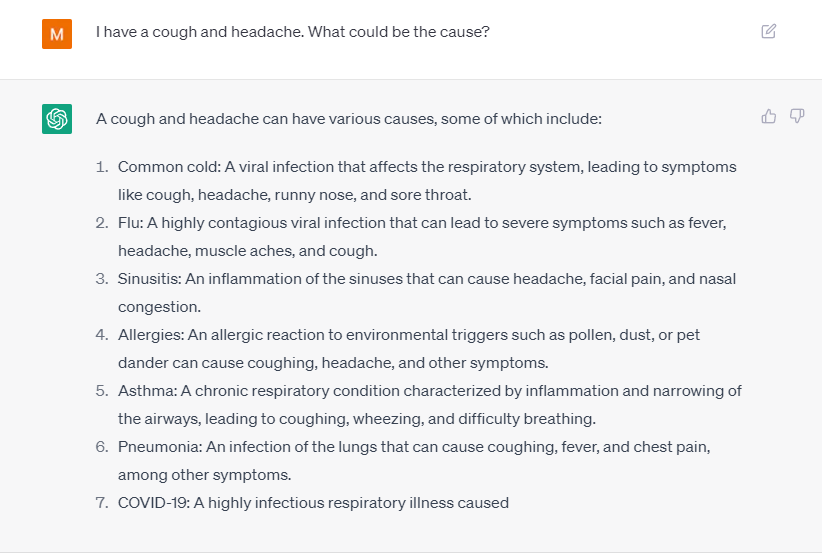

Comparison of ChatGPT and MedGPT

One of the major differences is the flow of conversation. ChatGPT acts much more robotic and is more like a question-answering machine, while MedGPT tries to emulate a conversation and asks questions which could help find the cause rather than just being general. Specifically, MedGPT will ask for other symptoms, what medication you're taking, or if you have any other known disease if necessary.

Another difference is credibility. MedGPT is supplied with credible sources, however ChatGPT may hallucinate or make up false information since it doesn’t have the sources backing it. One could argue that with OpenAI’s new plugin feature, ChatGPT can access the web therefore find credible sources, but the issue with that is that now ChatGPT is no better than a human doing their own research: It can only view one source at a time. All it adds is a little less reading and a lot more waiting for the user. MedGPT on the other hand can sift through an entire database of medical knowledge and pick out the best pieces of information it can exploit to give you the best answer possible.

Finally, when faced with something that neither ChatGPT or MedGPT can solve, ChatGPT likes to guess what it is rather than just saying it doesn’t know. Admittedly, it does sometimes guess right while MedGPT is inconclusive, however it also gets it wrong. Getting a diagnosis wrong, even ten percent of the time, is arguably worse than not getting anything at all. The risk associated with AI goes down while using MedGPT.

However, MedGPT is not strictly better than ChatGPT. For one, it had much larger wait times on both startup as well as when it was running. The issue is that it takes time to load in and search through all of the data it has. Also, there have been a few cases where MedGPT gets confused with the data given or refuses to give a medication recommendation.

Conclusion

In conclusion, MedGPT has taken ChatGPT to the next level when it comes to diagnosis, medicine, and conversation. As time goes on, AI will continue to become more and more advanced, improving or even replacing existing technologies. And despite the many ethical controversies surrounding AI, MedGPT is a prime example that AI can be extremely beneficial to humankind as a whole.